Introduction

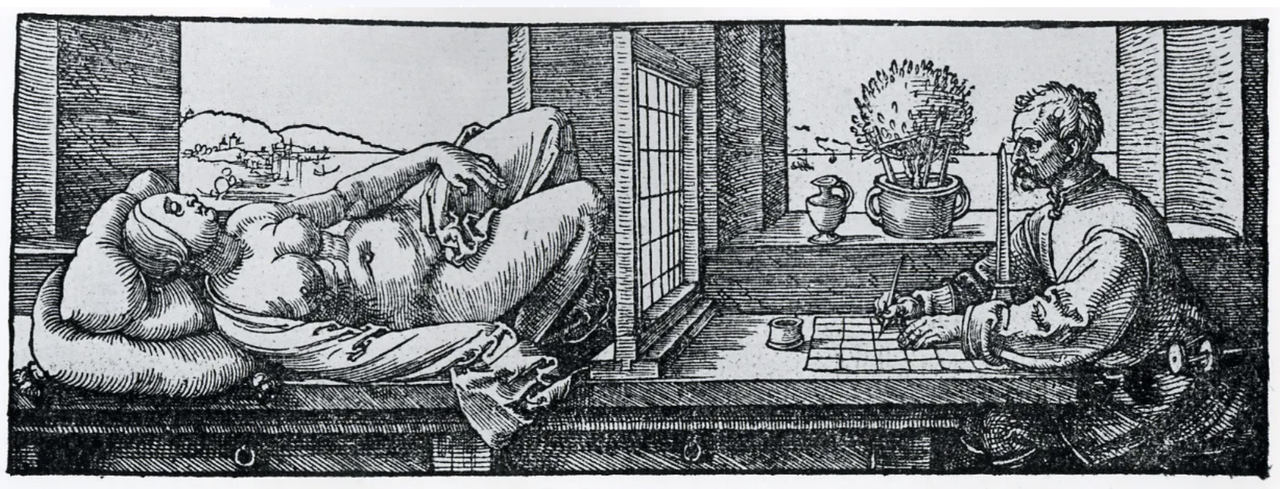

In the 16th century, the innovative artist Albrecht Dürer created a “Perspective Machine” to help artists draw perspective accurately. He placed a screen divided into a 2D grid between the artist and the subject. The artist would then establish a line of sight from his eye through the screen to any part of the subject, and copy what he saw in each grid cell onto the drawing paper in front of him, which had a matching grid. (From Bob Duffy)

This technique for rendering an image by tracing the path of light through cells of a 2D image plane is called ray casting or ray tracing, and it’s how today’s advanced computer graphics got its start. (From Bob Duffy)
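The idea can be illustrated with a minimal ray casting sketch in Python. It assumes a pinhole camera at the origin looking down −z, an image plane at z = −1 that plays the role of Dürer's grid, and a single sphere as the subject. The scene and the names `ray_sphere_hit` and `cast_rays` are hypothetical. Each pixel gets one ray through the center of its grid cell, and the ray is tested against the sphere by solving a quadratic:

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance t to the nearest hit in front of the origin, or None on a miss."""
    # Solve |origin + t*direction - center|^2 = radius^2, a quadratic a*t^2 + b*t + c = 0.
    oc = [o - ce for o, ce in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-6:  # ignore hits behind (or at) the eye
            return t
    return None


def cast_rays(width, height, center=(0.0, 0.0, -3.0), radius=1.0):
    """Shoot one ray per pixel from an eye at the origin through a grid on the plane z = -1."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel center to [-1, 1] on the image plane (the "screen grid").
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            direction = (x, y, -1.0)
            hit = ray_sphere_hit((0.0, 0.0, 0.0), direction, center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


for line in cast_rays(21, 11):
    print(line)
```

Each `#` marks a pixel whose ray hit the sphere. A full renderer would compute a color at each hit point instead of just marking it.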

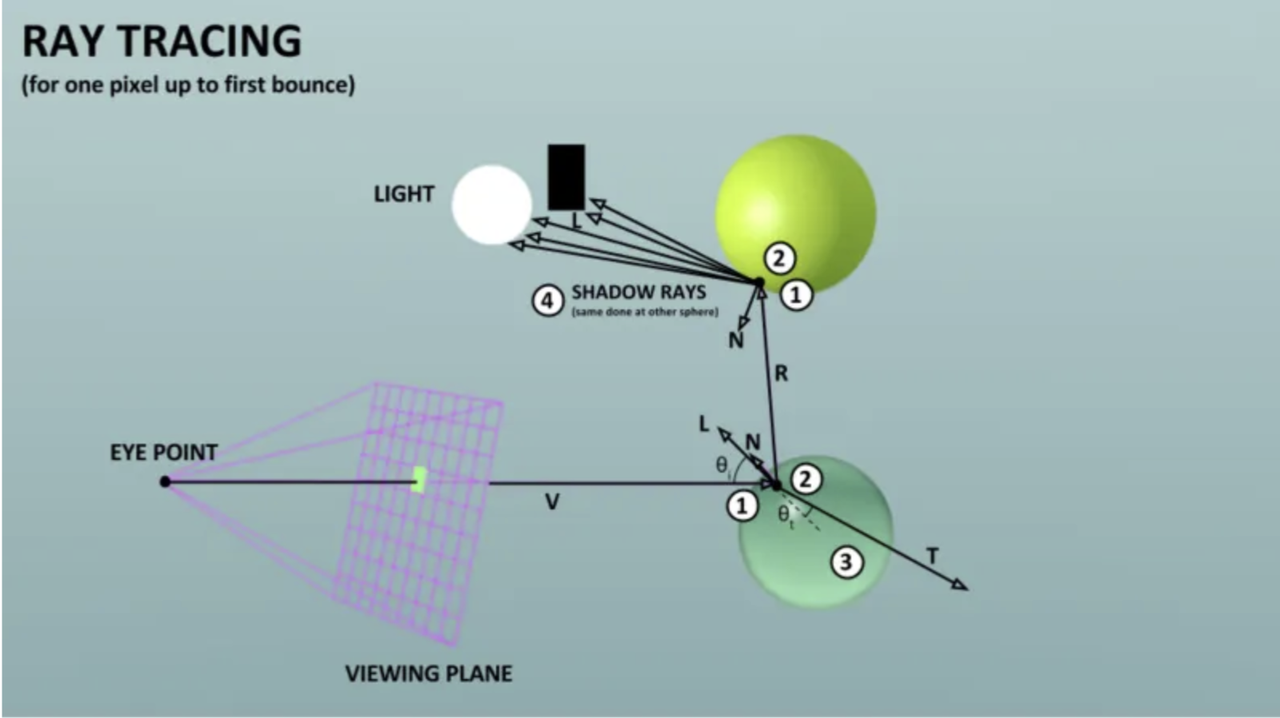

This mimics the physics of optics, but in reverse. In the real world, light rays start in the environment, bounce off objects, and then land in our eye. For rendering, we project rays in reverse, because we only need the rays that land in the camera. (From Bob Duffy) This lets us simulate how light travels in the physical world. Because rays can be reflected and refracted, we can even simulate the paths of secondary and tertiary rays.
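Secondary rays are spawned at a hit point from the incoming direction and the surface normal. The following is a minimal sketch that assumes unit-length vectors stored as plain tuples and a normal that faces the incoming ray. The helper names are hypothetical. Reflection uses r = d − 2(d·n)n, and refraction uses the vector form of Snell's law with η = n₁/n₂:

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(d, n):
    """Mirror direction d about the unit surface normal n: r = d - 2 (d.n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))


def refract(d, n, eta):
    """Bend unit direction d through a surface with unit normal n (facing the incoming ray).

    eta = n1 / n2 is the ratio of refractive indices. Returns None on total internal
    reflection, when no transmitted ray exists.
    """
    cos_i = -dot(d, n)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None  # total internal reflection: only the reflected ray remains
    return tuple(eta * di + (eta * cos_i - math.sqrt(k)) * ni for di, ni in zip(d, n))


s = math.sqrt(0.5)
incoming = (s, -s, 0.0)   # a ray heading down onto a floor at 45 degrees
normal = (0.0, 1.0, 0.0)  # the floor's normal points up
print(reflect(incoming, normal))              # mirrored upward
print(refract(incoming, normal, 1.0 / 1.5))   # air to glass: bends toward the normal
```

In a ray tracer, each of these new directions would be cast again from the hit point. This recursion is what produces the secondary and tertiary rays.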

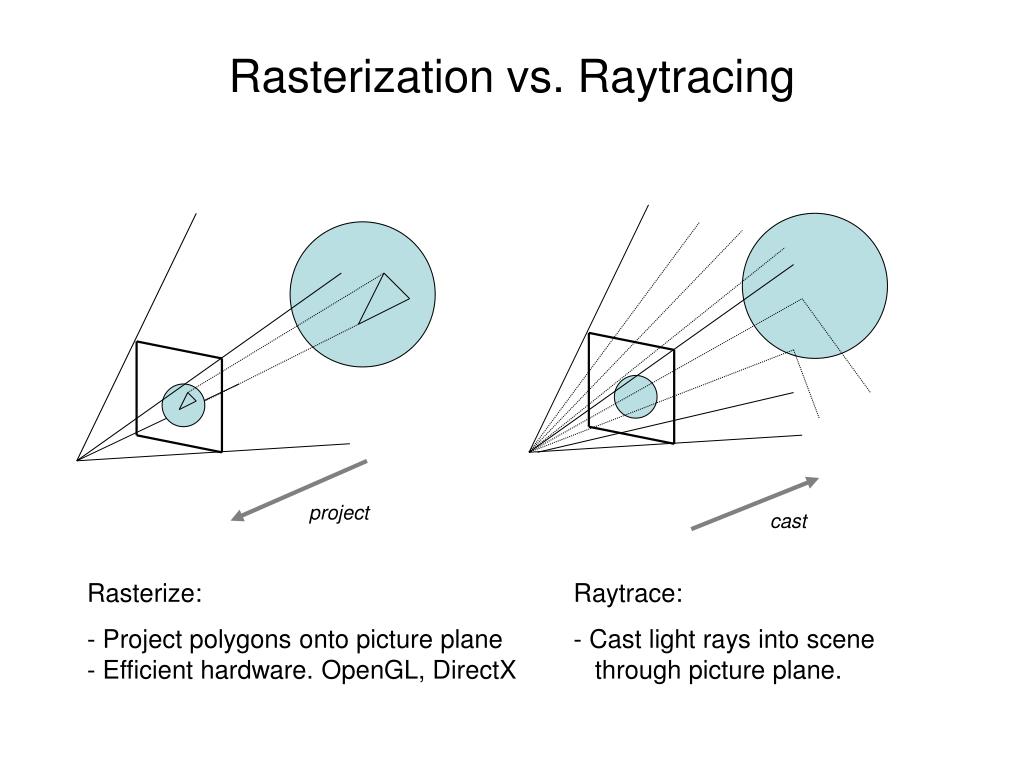

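The problem with ray tracing is that it is slow, while most 3D applications need to render at about 24–60 FPS. A faster technique, rasterization, is used instead: each object is decomposed into a mesh of connected triangles that share edges and vertices, and those triangles are projected onto the screen.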
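To put these frame rates in perspective, here is a rough time budget per frame. The pixel count assumes a 1920 × 1080 image with one primary ray per pixel. That resolution is an illustrative assumption, not a figure from the source.

$$
\frac{1}{60\ \text{FPS}} \approx 16.7\ \text{ms per frame}, \qquad \frac{1}{24\ \text{FPS}} \approx 41.7\ \text{ms per frame}
$$

$$
1920 \times 1080 \times 60 = 124{,}416{,}000\ \text{primary rays per second}
$$

Every reflected or refracted bounce multiplies this count further.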

In short, the rasterization pipeline works as follows (A good video that explains the rasterization pipeline):

- Inputs: vertices (3D points) + triangle connectivity (a triangle list). Optional: surface normals and UVs (2D coordinates into the texture image, used to map the texture onto the surface).

- Vertex shading:

  - Coordinate transformation: Model → World → View (camera extrinsics) → Projection (camera intrinsics/perspective). The result is in “clip space” (x, y, z, w), where primitives are later clipped against the view frustum.

- Tessellation (optional): subdivides patches into finer primitives, as directed by the tessellation control and evaluation shaders.

- Geometry shader (optional): can add, remove, or modify primitives.

- Primitive assembly: uses your triangle list/strip indices to form triangles.

- Perspective divide and viewport transform: after projection, x, y, and z are divided by w to get Normalized Device Coordinates (NDC). The viewport transform then maps NDC to screen pixels.

- Rasterization: a coverage test converts each triangle into fragments (candidate pixels), and depth testing resolves visibility where fragments overlap.
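The pipeline steps above can be sketched in plain Python. This is a minimal, CPU-only illustration under several assumptions:

- The vertices are already in view space, so the model, world, and view matrices are taken as identity.
- The projection matrix follows the OpenGL convention, with the camera looking down −z.
- There is no clipping, so every vertex must lie in front of the camera.
- The coverage test uses edge functions without a tie-breaking rule for pixels that lie exactly on an edge.

All function names are hypothetical.

```python
import math


def mat_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style projection matrix (camera looks down -z); its output is clip space."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]


def to_screen(clip, width, height):
    """Perspective divide (clip -> NDC), then viewport transform (NDC -> pixels)."""
    x, y, z, w = clip
    ndc_x, ndc_y, ndc_z = x / w, y / w, z / w
    sx = (ndc_x + 1.0) * 0.5 * width
    sy = (1.0 - ndc_y) * 0.5 * height  # flip y so row 0 is the top of the image
    return sx, sy, ndc_z


def edge(a, b, p):
    """Edge function: signed area telling which side of edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(tri, color, depth, frame):
    """Coverage test with edge functions, visibility with a depth buffer."""
    a, b, c = tri
    area = edge(a, b, c)
    if area == 0:
        return  # degenerate triangle covers no pixels
    for py in range(len(frame)):
        for px in range(len(frame[0])):
            p = (px + 0.5, py + 0.5)  # sample at the pixel center
            # Barycentric weights; dividing by area makes them positive inside for either winding.
            w0, w1, w2 = edge(b, c, p) / area, edge(c, a, p) / area, edge(a, b, p) / area
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue  # pixel center is outside the triangle
            z = w0 * a[2] + w1 * b[2] + w2 * c[2]  # interpolate NDC depth
            if z < depth[py][px]:  # smaller NDC z means closer to the camera
                depth[py][px] = z
                frame[py][px] = color


W, H = 16, 8
proj = perspective(60.0, W / H, 0.1, 100.0)
# Two triangles given directly in view space (x, y, z, w = 1).
near_tri = [[-1, -1, -3, 1], [1, -1, -3, 1], [0, 1, -3, 1]]
far_tri = [[-2, 0, -6, 1], [2, 0, -6, 1], [0, 2, -6, 1]]
frame = [["." for _ in range(W)] for _ in range(H)]
depth = [[float("inf")] * W for _ in range(H)]
for tri, color in ((far_tri, "F"), (near_tri, "N")):
    screen = [to_screen(mat_vec(proj, v), W, H) for v in tri]
    rasterize(screen, color, depth, frame)
print("\n".join("".join(row) for row in frame))
```

The far triangle is drawn first. Wherever the two triangles overlap, the near triangle still wins, because its interpolated NDC depth is smaller. Interpolating NDC depth with screen-space barycentric weights is valid because z/w varies linearly across the screen. Attributes such as UVs would instead need perspective-correct interpolation.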

One issue with rasterization is that it does not reproduce lighting effects such as shadows, reflections, and refractions very well. (Source: Simon Kang) Real-time ray tracing in video games requires advanced hardware, but for gamers who want that extra dose of realism, it may be a worthy investment.

Unlike ray tracing, which simulates reflections and refractions, (volumetric) ray casting simply shoots one ray per pixel through a 3D volume. It samples along that ray to accumulate color and opacity for the pixel. Surface ray casting also shoots one ray, but only determines whether it hits a surface (hit/no hit), which is essentially collision detection.
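The following is a minimal sketch of volumetric ray casting for a single pixel. It assumes a hypothetical volume (a fuzzy ball defined by a `density` function) and a hypothetical grayscale transfer function; both are illustrations, not part of the source. The ray is sampled at fixed steps. Each sample's color and opacity are composited front to back with the "over" operator, and the loop stops early once the pixel is almost opaque:

```python
import math


def density(p):
    """Hypothetical volume: a fuzzy ball of radius 1 centred at (0, 0, -3)."""
    x, y, z = p
    r = math.sqrt(x * x + y * y + (z + 3.0) ** 2)
    return max(0.0, 1.0 - r)  # 1 at the centre, fading to 0 at the edge


def transfer(d, step):
    """Hypothetical transfer function: density -> (gray level, opacity of one step)."""
    return d, 1.0 - math.exp(-4.0 * d * step)


def cast_volume_ray(origin, direction, t_near=1.0, t_far=5.0, step=0.05):
    """Accumulate color and opacity along one ray, nearest samples first."""
    color, alpha = 0.0, 0.0
    t = t_near
    while t < t_far and alpha < 0.99:  # early ray termination once nearly opaque
        p = tuple(o + t * d for o, d in zip(origin, direction))
        c, a = transfer(density(p), step)
        color += (1.0 - alpha) * a * c  # front-to-back "over" compositing
        alpha += (1.0 - alpha) * a
        t += step
    return color, alpha


print(cast_volume_ray((0.0, 0.0, 0.0), (0.0, 0.0, -1.0)))  # centre ray: mostly opaque
print(cast_volume_ray((0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))   # misses the ball: (0.0, 0.0)
```

The front-to-back order is a deliberate design choice. Because accumulated opacity only grows, the loop can stop as soon as later samples would be hidden. Rendering a whole image repeats this for every pixel's ray.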